23-10-2014 by: Patrick Diks

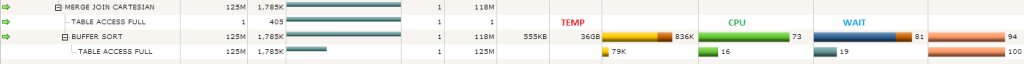

Last week I was called in on a performance issue: a query on a data warehouse that took about 4 hours. Looking at the execution plan in the excellent SQL Monitor, I noticed a big full table scan yielding a whopping 125 million rows. Before I could turn around to ask the application team why this table was not partitioned, my eyes were drawn to the CPU usage and wait bars in the plan. The buffer sort on the result of the full table scan was taking far more time than the scan itself!

I saw this phenomenon a long time ago, and I wouldn’t have thought it would still be around in the 11g R2 database.

The culprit here is the Cartesian join. The query contained a Cartesian join to a calendar table that was filtered on a single day. As a result, all 125 million rows from the full table scan are put in a sort area for the buffer sort. The sort area is way too small to hold these rows, so the buffer sort spills to disk and runs in temp.
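While such a query is running, one way to check whether the buffer sort really has spilled to temp is to look at its active work areas. This is a minimal sketch, assuming you have select access to `V$SQL_WORKAREA_ACTIVE`; the `:sql_id` bind is a placeholder for the SQL_ID of the statement you are investigating.

```sql
-- Sketch: list the active work areas of one running statement.
-- :sql_id is a placeholder, not a value taken from the case described here.
SELECT operation_type,
       operation_id,                                   -- matches the plan line id
       ROUND(actual_mem_used / 1024 / 1024) AS mem_mb,
       ROUND(tempseg_size / 1024 / 1024)    AS temp_mb, -- NULL while nothing has spilled
       number_passes                                   -- 0 = optimal, 1 = one-pass, >1 = multi-pass
FROM   v$sql_workarea_active
WHERE  sql_id = :sql_id
ORDER  BY operation_id;
```

A non-null `temp_mb` on the plan line of the buffer sort means that work area is writing to temp.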

The optimizer probably took this approach to prevent the full table scan from being repeated in case the cardinality estimate on the calendar table was off, but the result is pretty dramatic. When I forced the use of a nested loops join, the buffer sort was gone. The takeaway is that in 11g R2 the optimizer apparently still can’t foresee the cost of a buffer sort that spills to disk.
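As a rough sketch of what forcing the nested loops join can look like: the table names `calendar` and `sales_fact`, the column `calendar_date`, and the bind `:report_date` are all hypothetical, since the original query is not shown here. `LEADING` and `USE_NL` are standard Oracle hints.

```sql
-- Hypothetical tables and columns, for illustration only.
-- LEADING(c) makes the filtered calendar table the driving row source;
-- USE_NL(f) requests a nested loops join to the big table, instead of
-- a MERGE JOIN CARTESIAN with a BUFFER SORT on top of the full scan.
SELECT /*+ LEADING(c) USE_NL(f) */
       c.calendar_date,
       COUNT(*) AS row_count
FROM   calendar   c,
       sales_fact f              -- no join predicate: a Cartesian join
WHERE  c.calendar_date = :report_date
GROUP  BY c.calendar_date;
```

With nested loops, the big table is scanned once for every calendar row that passes the filter, so this only pays off when the filter really returns a single row (or very few).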

For now there doesn’t seem to be an elegant way to work around this. Maybe the adaptive query optimisation of 12c will resolve this in a better way.